Running TensorFlow on Android Things

28 Aug 2017

In May of 2017 Google announced a version of its TensorFlow library dedicated to mobile devices, back then called TensorFlow Lite. Later they rebranded it as TensorFlow Mobile. It relies on a process called quantization, which is basically a way to represent a neural network’s weights using fewer bits than the usual 32-bit floating-point format. In the case of TensorFlow Mobile, an 8-bit fixed-point format is used.
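To make the idea of quantization more concrete, here is a minimal sketch of linear 8-bit quantization in plain Python. It is not TensorFlow's actual implementation: the `quantize` and `dequantize` helpers and the sample weights are my own illustration, and I am assuming a simple min/max scheme where each float is mapped to one of 256 evenly spaced levels. Storing one byte instead of four per weight is where the roughly 4x size reduction comes from.

```python
def quantize(weights, num_bits=8):
    """Map floats to integer codes in [0, 2**num_bits - 1] using the min/max range."""
    lo, hi = min(weights), max(weights)
    levels = (1 << num_bits) - 1           # 255 for 8 bits
    # Guard against a constant list, where hi == lo would give a zero step.
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((w - lo) / scale) for w in weights]
    return codes, lo, scale


def dequantize(codes, lo, scale):
    """Recover approximate floats from the integer codes."""
    return [lo + c * scale for c in codes]


# Hypothetical weights, chosen only for illustration.
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
codes, lo, scale = quantize(weights)
restored = dequantize(codes, lo, scale)

# The round trip is lossy, but the error is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

The trade-off is visible in `max_error`: the restored weights are close to the originals but not identical, which is usually acceptable for inference.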

The whole point of this library is to run on low-power devices with limited storage, since “traditional” deep neural nets can be both huge (hundreds of megabytes) and computationally very demanding (which requires powerful hardware and a lot of energy).

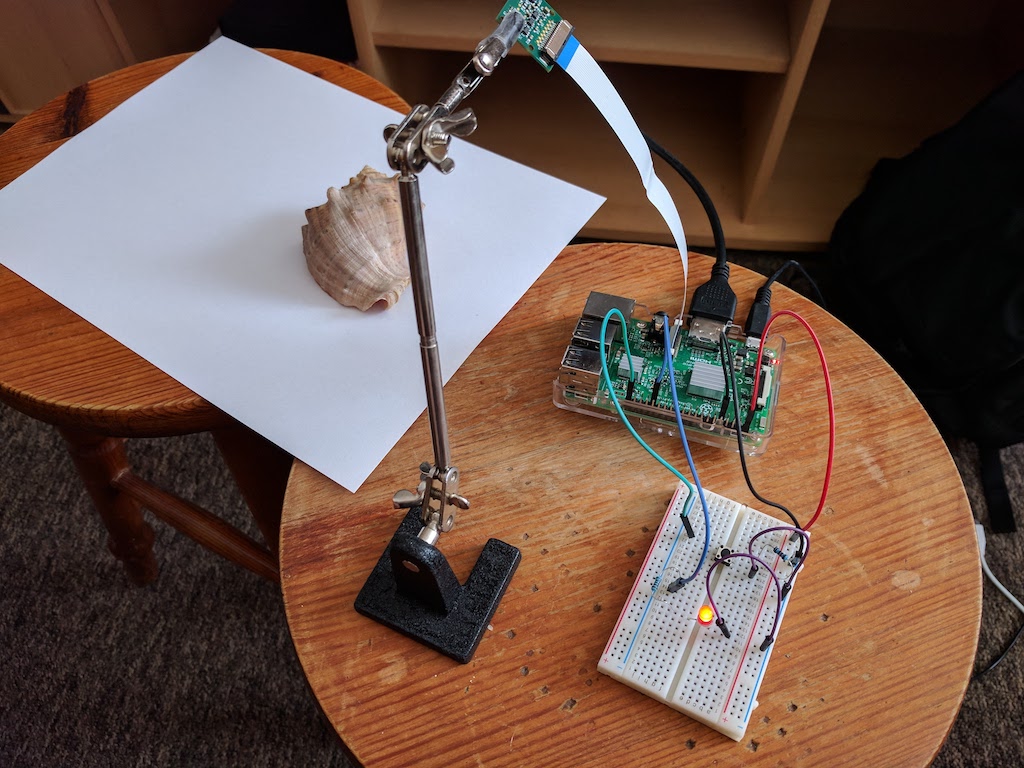

I decided to give it a try on my Raspberry Pi 3 board running Android Things Developer Preview 4.1. The code I used and the schematics are available on my GitHub. The network used is a pretrained Google Inception model.

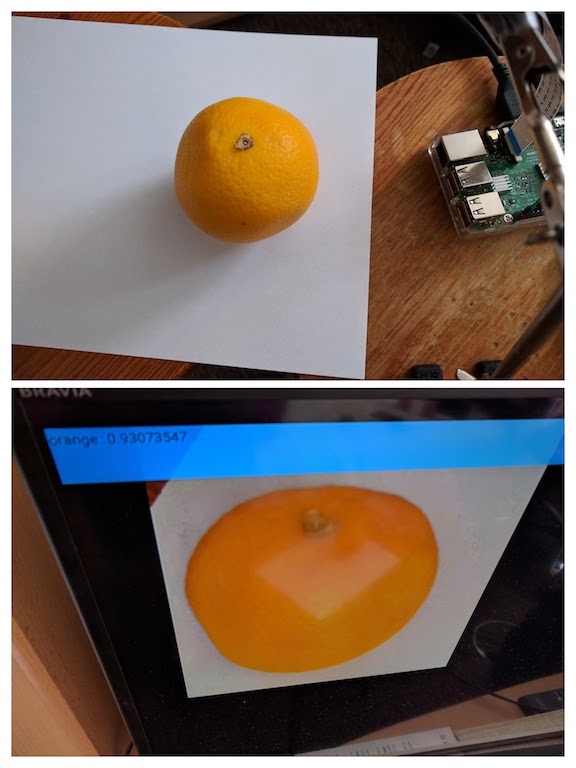

Here is the setup I used. The camera is an old 5-megapixel module from 2013, so the picture quality isn’t great by today’s standards. I used a slightly modified soldering gripper as a makeshift tripod.

Quick and dirty setup

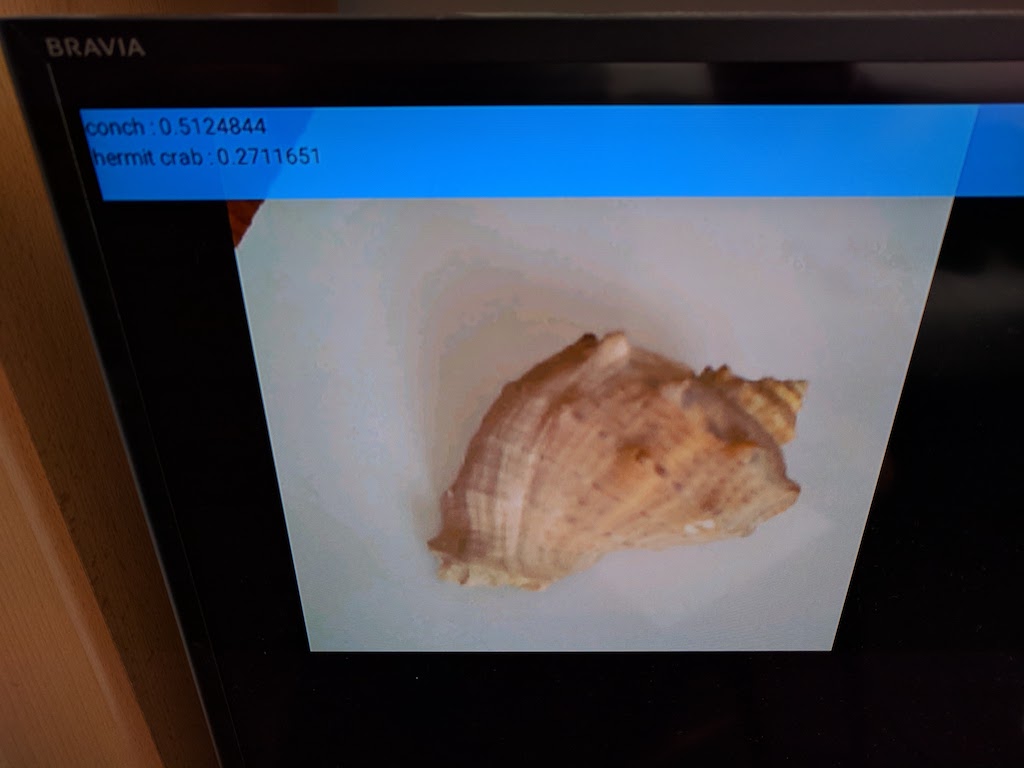

It recognizes the conch shell with 51% certainty

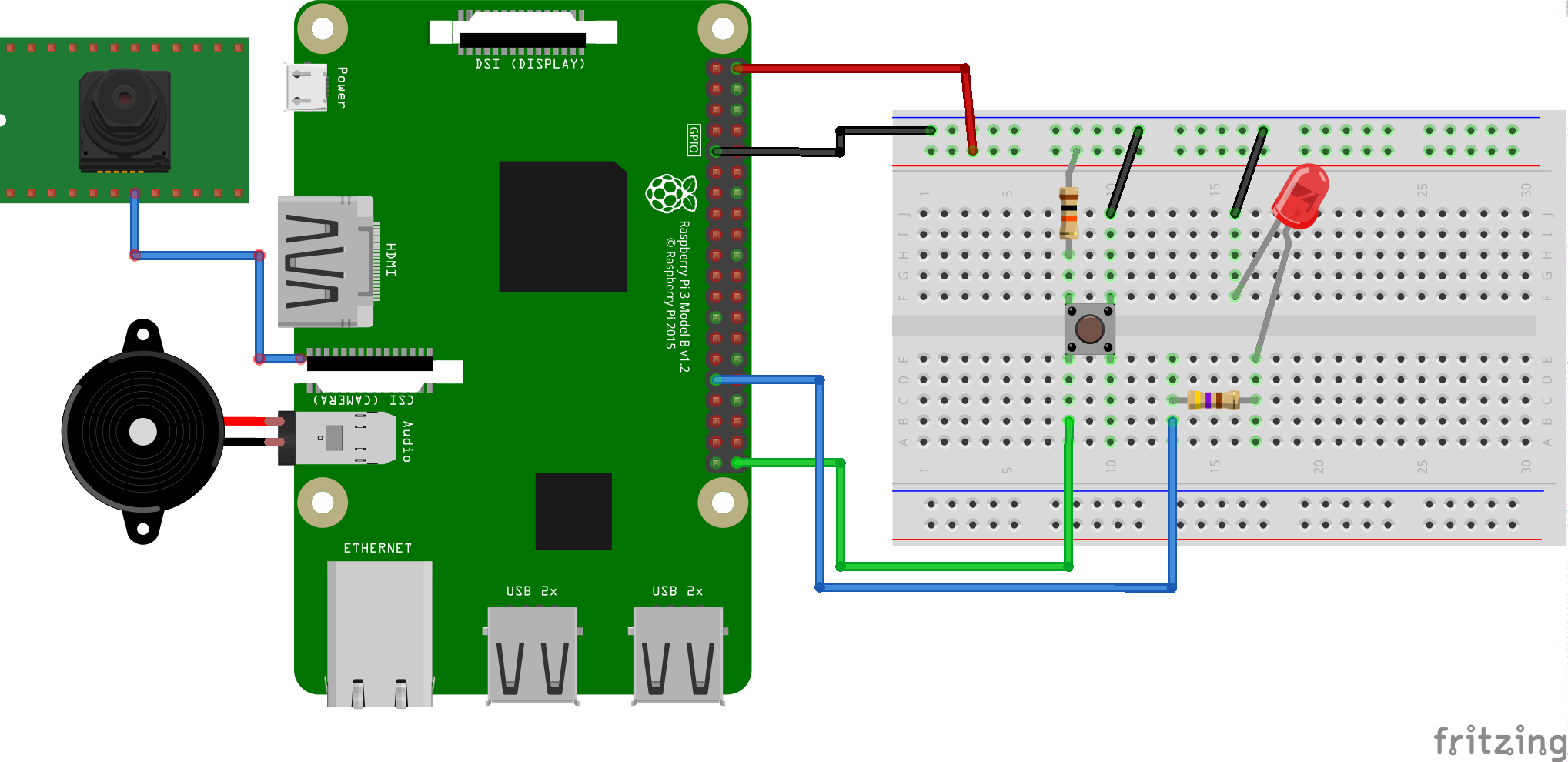
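For context on where a number like 51% comes from: an image classifier typically outputs one score per known label, and the app reports the highest ones. The sketch below shows that ranking step in plain Python. It is not the code from my project; the `top_k` helper, the label list, and every score except the 0.51 for the conch are made up for illustration, and I am assuming the scores are already probabilities that sum to 1.

```python
def top_k(scores, labels, k=3):
    """Return the k (label, score) pairs with the highest scores, best first."""
    ranked = sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical labels and scores; only the 0.51 for "conch" matches the photo above.
labels = ["conch", "snail", "hermit crab", "seashore"]
scores = [0.51, 0.22, 0.15, 0.12]

best_label, best_score = top_k(scores, labels, k=1)[0]
message = f"{best_label}: {best_score:.0%}"
print(message)
```

Showing the top few results rather than just the winner is handy with a weak camera, since the right answer is often in second or third place.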

Schematics of the project:

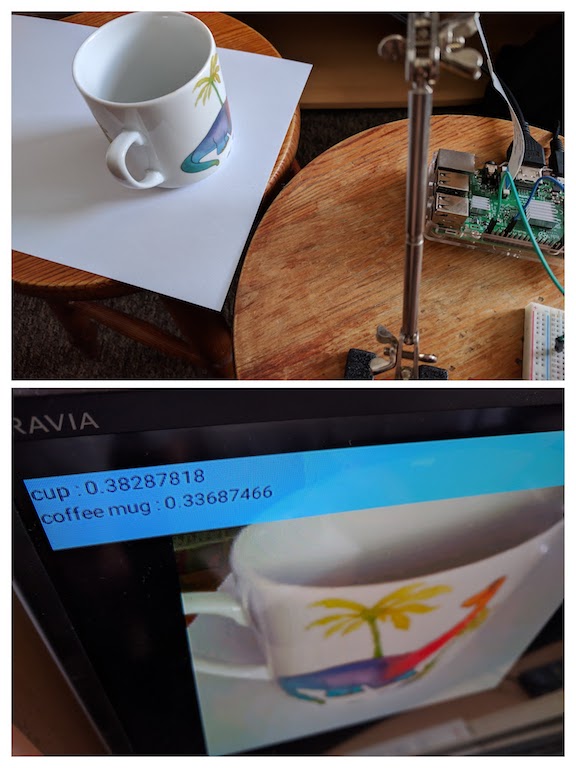

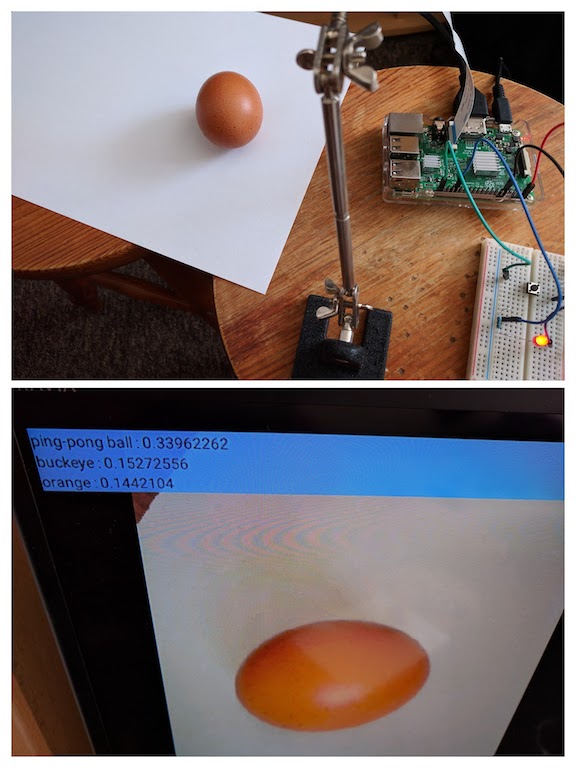

Here are some other examples of the network at work:

Of course, the network isn’t perfect:

The whole app is about 70 MB. Keep in mind that all of the app’s work is done offline, contained in the tiny body of a Raspberry Pi 3. Inference on a single picture takes under one second. I think the future looks particularly promising, given the advances in hardware (new, cheaper, and more powerful IoT platforms) and in machine learning algorithms.